ML & DL — Development Environment (Part 1)

What is machine learning, description of the development environment, and workflow implementation with Keras.

In this article, you will find:

- Basic and mathematical notions in machine learning,

- The development environment and a brief introduction to TensorFlow, Keras, Python and Jupyter Notebook, and

- The workflow in Keras for upcoming deployments.

Basic notions

To understand deep learning, you need to have a solid understanding of the basic principles of machine learning[1].

Machine learning is all about creating an algorithm that can learn from the data to make a prediction[1].

Machine learning can be classified into three types [1]:

- Supervised: each example in the data set has a set of features and an associated label or target,

- Unsupervised: the data set contains many features but no labels, and the algorithm learns useful properties of the structure of that data set, and

- Reinforcement: the algorithm interacts with an environment, so there is a feedback loop between the learning system and its experiences.

Mathematical notions

Four major mathematical disciplines make up machine learning:

- Statistics is the core of everything. It tells us what our goal is.

- Calculus tells us how to learn and optimize our model.

- Linear algebra makes the execution of algorithms viable on massive data sets.

- Probability theory helps to estimate the likelihood of an event occurring.
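As a rough illustration of how these four disciplines meet in practice, the sketch below fits a line to noisy synthetic data with gradient descent. The data, the learning rate, and all variable names are assumptions made for this example; it uses plain NumPy, not Keras.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Probability: targets are modelled as a linear signal plus Gaussian noise.
n_samples = 200
X = rng.uniform(-1.0, 1.0, size=(n_samples, 1))
true_w, true_b = 3.0, -1.0
y = true_w * X[:, 0] + true_b + rng.normal(0.0, 0.1, size=n_samples)

# Linear algebra: a column of ones folds the bias into one matrix product.
X_design = np.hstack([X, np.ones((n_samples, 1))])
params = np.zeros(2)  # [w, b]

learning_rate = 0.5
for _ in range(500):
    residual = X_design @ params - y
    # Statistics: the goal is a small mean squared error (MSE).
    mse = np.mean(residual ** 2)
    # Calculus: the gradient of the MSE says how to change the parameters.
    grad = 2.0 * X_design.T @ residual / n_samples
    params -= learning_rate * grad

w_hat, b_hat = params
print(f"w = {w_hat:.2f}, b = {b_hat:.2f}, final MSE = {mse:.4f}")
```

The estimated `w_hat` and `b_hat` should land close to the values used to generate the data, with a remaining error on the order of the injected noise.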

Development environment

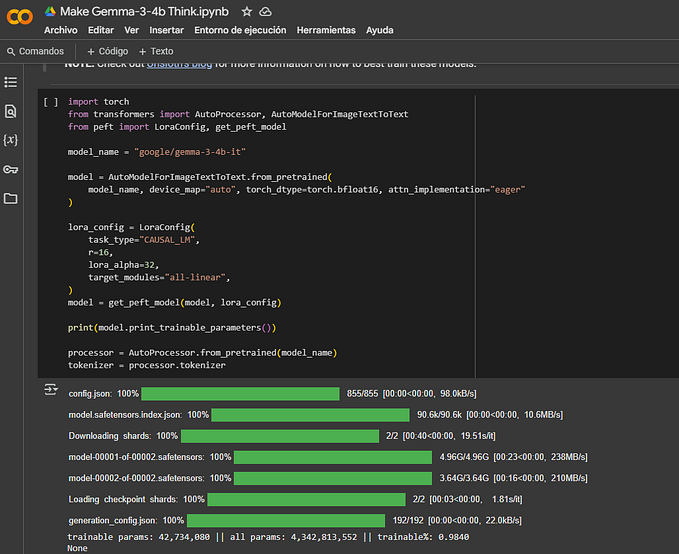

The development environment uses TensorFlow and Keras, two of the main frameworks for machine learning and deep learning, with programming in Python in a Jupyter Notebook environment.

Frameworks operate at two levels of abstraction:

- Low level: the mathematical operations and primitives of neural networks are implemented (TensorFlow, PyTorch).

- High level: low-level primitives are used to implement neural network abstractions, such as models and layers (Keras).
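To make the two levels concrete, here is a sketch in plain NumPy rather than in TensorFlow or Keras. The function `dense_forward` plays the role of a low-level primitive and the `TinyDense` class plays the role of a high-level layer; both names are invented for this illustration.

```python
import numpy as np


def dense_forward(x, weights, bias):
    """Low-level view: a matrix product, a bias addition, and a ReLU."""
    return np.maximum(x @ weights + bias, 0.0)


class TinyDense:
    """High-level view: a layer object that owns and hides its parameters."""

    def __init__(self, n_inputs, n_units, seed=0):
        rng = np.random.default_rng(seed)
        self.weights = rng.normal(0.0, 0.1, size=(n_inputs, n_units))
        self.bias = np.zeros(n_units)

    def __call__(self, x):
        return dense_forward(x, self.weights, self.bias)


x = np.ones((4, 3))  # 4 samples, 3 features
layer = TinyDense(n_inputs=3, n_units=2)
outputs = layer(x)
print(outputs.shape)
```

The user of `TinyDense` only chooses the number of units; the shapes of the weight matrix and the arithmetic stay inside the layer, which is the kind of convenience a high-level API offers.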

TensorFlow

- Google’s open-source software library for high-performance numerical computing.

- Flexible architecture that runs across multiple platforms (CPUs, GPUs, TPUs), from desktops to mobile devices.

Read more about TensorFlow.

Keras

- High-level neural network API, written in Python.

- Runs on CPU and GPU.

- Allows rapid experimentation.

Read more about Keras.

Python

- High-level interpreted programming language.

- Provides packages such as NumPy and Matplotlib.

Read more about Python.

Jupyter Notebook

- An open-source web application that allows you to create and share documents that contain live code, equations, visualizations, and narrative text.

- Supports different languages, including Python, R, Julia, and Scala.

Read more about Jupyter.

Conda Environment

- Conda is an open-source package management system and environment management system.

- Conda quickly installs, runs, and updates packages and their dependencies.

Read more about Conda.
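Once an environment is active, a quick check from Python can confirm which of the tools above are installed. This is a minimal sketch using only the standard library; the package list is an assumption based on the tools named in this article, and a missing package is reported rather than treated as an error.

```python
from importlib.metadata import PackageNotFoundError, version
import sys

# Assumed distribution names for the tools mentioned in this article.
PACKAGES = ["tensorflow", "keras", "numpy", "matplotlib", "notebook"]


def installed_versions(packages):
    """Map each package name to its version string, or None if absent."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


versions = installed_versions(PACKAGES)
print(f"Python {sys.version_info.major}.{sys.version_info.minor}")
for name, ver in versions.items():
    print(f"{name}: {ver if ver is not None else 'not installed'}")
```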

Workflow

Workflow in Keras:

Model training and evaluation:

- Load Data

- Define Model

- Compile Model

- Fit Model

- Evaluate Model

1. Load Data

- Load data (training and testing set):

X_train, y_train = load_data_train()
X_test, y_test = load_data_test()

- View data, and

- Pre-process data.
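The loading functions in this step are placeholders. As a sketch of the three sub-steps, the example below substitutes synthetic NumPy data for them, so the array sizes, the 80/20 split, and the choice of standardization are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(seed=42)

# Load: synthetic stand-in for a real data set (100 samples, 3 features).
X = rng.normal(loc=5.0, scale=2.0, size=(100, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(0.0, 0.1, size=100)

# Split into a training set (80%) and a testing set (20%).
n_train = 80
X_train, y_train = X[:n_train], y[:n_train]
X_test, y_test = X[n_train:], y[n_train:]

# View: check shapes and basic statistics before modelling.
print("train:", X_train.shape, y_train.shape)
print("test: ", X_test.shape, y_test.shape)
print("feature means:", X_train.mean(axis=0).round(2))

# Pre-process: standardize with statistics from the training set only,
# then apply the same transformation to the testing set.
mean = X_train.mean(axis=0)
std = X_train.std(axis=0)
X_train_scaled = (X_train - mean) / std
X_test_scaled = (X_test - mean) / std
```

Computing `mean` and `std` on the training set alone keeps information from the testing set out of the model, so the later evaluation stays an honest estimate.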

2. Define Model

Keras offers two ways to define a model: the Sequential model and the Functional API.

- Sequential is used to stack layers: model.add() adds a layer, and input_shape=() specifies the input shape of the first layer.

model = keras.models.Sequential()
model.add(layer1 …, input_shape=(nFeatures,))
model.add(layer2 … )
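The Functional API is named in this step but not shown. The sketch below builds the same small network both ways, assuming TensorFlow 2.x with its bundled Keras; the layer sizes, the activation, and the value of `n_features` are arbitrary choices for illustration.

```python
from tensorflow import keras

n_features = 8  # assumed number of input features

# Sequential: layers are stacked in order.
sequential_model = keras.Sequential([
    keras.Input(shape=(n_features,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(1),
])

# Functional API: layers are called on tensors, which also allows
# topologies with branches, multiple inputs, or multiple outputs.
inputs = keras.Input(shape=(n_features,))
hidden = keras.layers.Dense(16, activation="relu")(inputs)
outputs = keras.layers.Dense(1)(hidden)
functional_model = keras.Model(inputs=inputs, outputs=outputs)

sequential_model.summary()
functional_model.summary()
```

Both summaries should report the same layers and the same number of parameters. For a plain stack of layers the Sequential form is shorter; the Functional form becomes useful once the network is no longer a single chain.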

3. Compile Model

Configure the learning process by specifying:

- Optimizer, which determines how weights are updated,

- Cost function, or loss function, and

- Metrics to evaluate during training and testing.

model.compile(optimizer='SGD', loss='mse', metrics=['accuracy'])
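The string shortcuts passed to `compile()` can also be written as explicit objects, which exposes settings such as the learning rate. This is a sketch assuming TensorFlow 2.x; the tiny model, the learning rate of 0.01, and the choice of mean absolute error as the metric are illustrative and not taken from the article.

```python
from tensorflow import keras

model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(1),
])

model.compile(
    optimizer=keras.optimizers.SGD(learning_rate=0.01),  # how weights are updated
    loss=keras.losses.MeanSquaredError(),                # quantity to minimize
    metrics=[keras.metrics.MeanAbsoluteError()],         # reported, not optimized
)
```

For a regression loss such as `mse`, an error metric like mean absolute error is usually easier to interpret than `accuracy`, which is intended for classification.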

4. Fit Model

Start the training process.

- batch_size: number of samples in each batch; the data set is divided into batches of this size.

- epochs: number of complete passes over the training data set.

model.fit(X_train, y_train, batch_size=500, epochs=1)
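The relationship between `batch_size`, `epochs`, and the number of weight updates is simple arithmetic. The helper below is a hypothetical function written for this article, not part of Keras, and the sample count of 60,000 is an assumed example value.

```python
import math


def count_updates(n_samples, batch_size, epochs):
    """Return (batches per epoch, total weight updates) for a training run."""
    # The last batch may be smaller than batch_size, so round up.
    steps_per_epoch = math.ceil(n_samples / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs


steps, total = count_updates(n_samples=60_000, batch_size=500, epochs=1)
print(f"{steps} batches per epoch, {total} weight updates in total")
```

A smaller `batch_size` means more, noisier updates per epoch; a larger one means fewer, smoother updates and more memory per step.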

5. Evaluate Model

Evaluate the performance of the model.

- model.evaluate() computes the specified loss and metrics on the given data, which provides a quantitative measure of performance.

- model.predict() computes the output for the provided test data, which is useful for checking the outputs qualitatively.

scores = model.evaluate(X_test, y_test)
y_pred = model.predict(X_test)
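Putting the five steps together, here is a minimal end-to-end sketch. It assumes TensorFlow 2.x and NumPy are installed and uses synthetic regression data in place of the placeholder loading functions; the network size, the metric, the batch size, and the number of epochs are illustrative choices.

```python
import numpy as np
from tensorflow import keras

# 1. Load data: synthetic regression problem (an assumption for this sketch).
rng = np.random.default_rng(seed=0)
X = rng.normal(size=(1000, 4)).astype("float32")
y = (X @ np.array([1.5, -2.0, 0.5, 3.0], dtype="float32")).reshape(-1, 1)
X_train, y_train = X[:800], y[:800]
X_test, y_test = X[800:], y[800:]

# 2. Define model.
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(8, activation="relu"),
    keras.layers.Dense(1),
])

# 3. Compile model.
model.compile(optimizer="sgd", loss="mse", metrics=["mae"])

# 4. Fit model: fit() returns a History object with per-epoch values.
history = model.fit(X_train, y_train, batch_size=32, epochs=5, verbose=0)

# 5. Evaluate model: with one metric, evaluate() returns [loss, metric].
test_loss, test_mae = model.evaluate(X_test, y_test, verbose=0)
y_pred = model.predict(X_test, verbose=0)

print("training loss per epoch:", history.history["loss"])
print(f"test loss = {test_loss:.4f}, test MAE = {test_mae:.4f}")
print("predictions shape:", y_pred.shape)
```

Note that the per-epoch training record comes from `fit()`, while `evaluate()` returns only the final loss and metric values on the data it is given.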

Here is the link to all the articles in our ML & DL series.

References

[1] Goodfellow, I., Bengio, Y., and Courville, A. (2016). Deep Learning. MIT Press.