GANs — Deep Convolutional GANs with CIFAR10 (Part 8)

A brief theoretical introduction to Deep Convolutional Generative Adversarial Networks (DCGANs) and a practical implementation using Python and Keras/TensorFlow in Jupyter Notebook.

In this article, you will find:

- Research paper,

- Definition, network design, and cost function, and

- Training DCGANs with CIFAR10 dataset using Python and Keras/TensorFlow in Jupyter Notebook.

Research Paper

Radford, A., Metz, L., & Chintala, S. (2015). Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434.

Deep Convolutional Generative Adversarial Networks — DCGANs

The main difference between a simple GAN and a DCGAN is that the DCGAN generator uses transposed convolutions (also called fractionally-strided convolutions or, loosely, deconvolutions) to upsample 2D feature maps into an image.
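To make the upsampling idea concrete, here is a minimal pure-Python sketch of a 1D transposed convolution without padding. The function name `conv_transpose_1d` is hypothetical and the code is only an illustration of the operation, not the Keras implementation.

```python
def conv_transpose_1d(x, kernel, stride):
    """Transposed 1D convolution with no padding.

    Each input value scatters a scaled copy of the kernel into the output,
    and consecutive copies are placed `stride` positions apart.
    """
    k = len(kernel)
    out = [0.0] * ((len(x) - 1) * stride + k)
    for i, value in enumerate(x):
        for j, weight in enumerate(kernel):
            out[i * stride + j] += value * weight
    return out


# Three input values become six output values with stride 2.
upsampled = conv_transpose_1d([1.0, 2.0, 3.0], kernel=[1.0, 1.0], stride=2)
print(upsampled)  # [1.0, 1.0, 2.0, 2.0, 3.0, 3.0]
```

With a kernel of ones the result is a nearest-neighbour upsampling; in a DCGAN the kernel weights are learned instead.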

DCGANs are mainly composed of:

- Convolutional layers, without max pooling or fully connected layers.

- Strided convolutions for downsampling and transposed convolutions for upsampling.
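The effect of these strided layers on the spatial size can be traced with simple arithmetic. The sketch below assumes `padding='same'` and four stride-2 layers in each network, as in the training code later in this article; the helper names are hypothetical.

```python
import math


def conv_same_out(size, stride):
    """Spatial output size of a strided convolution with 'same' padding."""
    return math.ceil(size / stride)


def conv_transpose_same_out(size, stride):
    """Spatial output size of a transposed convolution with 'same' padding."""
    return size * stride


# Generator path: a 2x2 feature map upsampled by four stride-2 layers.
gen_sizes = [2]
for _ in range(4):
    gen_sizes.append(conv_transpose_same_out(gen_sizes[-1], stride=2))

# Discriminator path: a 32x32 image downsampled by four stride-2 layers.
disc_sizes = [32]
for _ in range(4):
    disc_sizes.append(conv_same_out(disc_sizes[-1], stride=2))

print(gen_sizes)   # [2, 4, 8, 16, 32]
print(disc_sizes)  # [32, 16, 8, 4, 2]
```

Each stride-2 layer doubles or halves the width and height, so no pooling layer is needed to change resolution.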


Network design

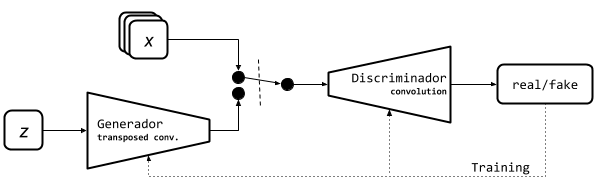

Here, x is the real data and z is a vector sampled from the latent space.

Cost function
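DCGANs keep the adversarial objective of the original GAN. Assuming that is the cost function meant here, the standard minimax form (Goodfellow et al., 2014) can be written with x as the real data and z as the latent vector, where D is the discriminator and G is the generator:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{data}(x)} \left[ \log D(x) \right]
  + \mathbb{E}_{z \sim p_z(z)} \left[ \log \left( 1 - D(G(z)) \right) \right]
```

In the Keras code in this article, both networks are trained with the `binary_crossentropy` loss, and the generator is updated with "real" labels on its generated images.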

Training DCGANs

1. Data: CIFAR10 dataset
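CIFAR10 images are 32x32 RGB arrays with 8-bit pixel values. Because the generator shown later ends in a `tanh` activation, its outputs lie in [-1, 1], so real images are usually rescaled to the same range before training. A minimal sketch of that rescaling, assuming NumPy; the helper names and the stand-in batch are hypothetical and not taken from the notebook:

```python
import numpy as np


def scale_to_tanh_range(images):
    """Map 8-bit pixel values in [0, 255] to floats in [-1, 1]."""
    return images.astype("float32") / 127.5 - 1.0


def scale_to_uint8(images):
    """Inverse mapping, useful for displaying generated samples."""
    return np.clip(np.rint((images + 1.0) * 127.5), 0, 255).astype("uint8")


# Stand-in batch with CIFAR10's image shape; real data comes from load_data().
dummy = np.random.randint(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)
scaled = scale_to_tanh_range(dummy)
restored = scale_to_uint8(scaled)
```

The inverse helper is handy when plotting generated images, since plotting libraries expect values in [0, 255] or [0, 1].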

from tensorflow.keras.datasets import cifar10

(X_train, y_train), (X_test, y_test) = cifar10.load_data()

2. Model:

- Generator

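from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Reshape, Conv2DTranspose, BatchNormalization, LeakyReLU

generator = Sequential()

# FC: 2x2x512

generator.add(Dense(2*2*512, input_shape=(latent_dim,), kernel_initializer=init))

# The reshape must match the Dense output size: 2 * 2 * 512 values

generator.add(Reshape((2, 2, 512)))

# Conv 1: 4x4x256

generator.add(Conv2DTranspose(256, kernel_size=3, strides=2, padding='same'))

generator.add(BatchNormalization(momentum=0.8))

# Leaky ReLU with negative slope 0.2

generator.add(LeakyReLU(0.2))

# Conv 2 and Conv 3

...

# Conv 4: 32x32x3

generator.add(Conv2DTranspose(3, kernel_size=5, strides=2, padding='same', activation='tanh'))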

- Discriminator

# Discriminator network

discriminator = Sequential()

# Conv 1: 16x16x64

discriminator.add(Conv2D(64, kernel_size=5, strides=2, padding='same', input_shape=(img_shape), kernel_initializer=init))

discriminator.add(LeakyReLU(0.2))

# Conv 2, 3 and 4

...

# FC

discriminator.add(Flatten())

# Output

discriminator.add(Dense(1, activation='sigmoid'))

3. Compile

discriminator.compile(Adam(learning_rate=0.0003, beta_1=0.5), loss='binary_crossentropy', metrics=['binary_accuracy'])

# Freeze the discriminator inside the combined model so only the generator is updated

discriminator.trainable = False

z = Input(shape=(latent_dim,))

img = generator(z)

decision = discriminator(img)

d_g = Model(inputs=z, outputs=decision)

d_g.compile(Adam(learning_rate=0.0004, beta_1=0.5), loss='binary_crossentropy', metrics=['binary_accuracy'])

4. Fit
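The training step in this section uses the label arrays `real` and `fake` and a smoothing factor `smooth`, which are not defined in the excerpt. A minimal sketch of how they could be set up, assuming NumPy; the batch size, the value of `smooth`, and the `binary_crossentropy` helper are illustrative assumptions, not values from the notebook:

```python
import numpy as np

batch_size = 32  # assumed value
smooth = 0.1     # assumed smoothing factor

# Target labels: 1 for real images, 0 for generated ones.
real = np.ones((batch_size, 1), dtype="float32")
fake = np.zeros((batch_size, 1), dtype="float32")

# One-sided label smoothing: real targets become 0.9 instead of 1.0.
smoothed_real = real * (1 - smooth)


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy, written out to show the effect of smoothing."""
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return float(np.mean(-(y_true * np.log(y_pred)
                           + (1 - y_true) * np.log(1 - y_pred))))


# With smoothed targets, an over-confident prediction is penalised more
# than a prediction that matches the smoothed target.
loss_matched = binary_crossentropy(smoothed_real, np.full_like(real, 0.9))
loss_overconfident = binary_crossentropy(smoothed_real, np.full_like(real, 0.999))
print(loss_matched < loss_overconfident)  # True
```

Smoothing only the real labels discourages the discriminator from becoming over-confident, which tends to keep the generator's gradients useful.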

# Train Discriminator weights

discriminator.trainable = True

# Real samples

X_batch = X_train[i*batch_size:(i+1)*batch_size]

d_loss_real = discriminator.train_on_batch(x=X_batch, y=real * (1 - smooth))

# Fake Samples

z = np.random.normal(loc=0, scale=1, size=(batch_size, latent_dim))

X_fake = generator.predict_on_batch(z)

d_loss_fake = discriminator.train_on_batch(x=X_fake, y=fake)

# Discriminator loss

d_loss_batch = 0.5 * (d_loss_real[0] + d_loss_fake[0])

# Train Generator weights

discriminator.trainable = False

d_g_loss_batch = d_g.train_on_batch(x=z, y=real)

5. Evaluate

# plotting the metrics

plt.plot(d_loss)

plt.plot(d_g_loss)

plt.show()

DCGANs — CIFAR10 results

Train summary

GitHub repository

See the complete DCGAN training notebook for the CIFAR10 dataset, using Python and Keras/TensorFlow in Jupyter Notebook.

For those looking for all the articles in our GANs series, here is the link.